Recently, Carl Ford was musing about potential ideas for a WebRTC Hackathon. One idea he had was to explore different UI designs for “Video on Hold”. This post summarizes our thoughts on the subject and the design decisions we made for a WebRTC application that is part of EnThinnai.

He felt that the design used in phone systems doesn’t work well for smartphones, so we should probably take a different approach for video calls. As an example, he wondered how the user should be notified when she has moved to a different browser tab and the other party retrieves the held video call.

In a follow-up post he elaborates on his point. He suggests that we might imitate the idea used in 1A2 Key System phones. To see how far we can carry that design, we need to go into a bit more detail.

These phones had a row of white buttons, each controlling one of the lines the phone had access to, and at most one button could be engaged at a time. A red button placed the currently engaged line on hold. All these buttons could be lit, and could also flash, to signify the status of the line: a quickly flashing light signified an incoming call, a slowly flashing light signified a line that had been put on hold, and a steady light indicated an active call. Subsequently, Avaya carried this design idea over to their digital sets as well. This concept of “call appearances” and an “active call appearance” is natural and very familiar in computer systems that use windows. It is easy to see that call appearances are nothing more than open windows and that the active call appearance is the active window. When the user selects a window to be active, the OS tacitly places the other windows on hold.
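
The lamp conventions described above amount to a small lookup table. Here is a minimal TypeScript sketch of it. The names `LineStatus`, `LampPattern` and `lampFor` are made up for illustration, and the "idle means lamp off" entry is an assumption, since the description only covers ringing, held and active lines.

```typescript
// Hypothetical names for illustration; not taken from any phone system or from EnThinnai.
type LineStatus = "idle" | "ringing" | "held" | "active";
type LampPattern = "off" | "fast-flash" | "slow-flash" | "steady";

// Lamp conventions as described in the text.
// Assumption: an idle line shows an unlit lamp (the text does not say).
const LAMP_FOR_STATUS: Record<LineStatus, LampPattern> = {
  idle: "off",
  ringing: "fast-flash", // incoming call
  held: "slow-flash",    // line placed on hold by the local user
  active: "steady",      // call in progress
};

// Look up the lamp pattern a line button should show for a given status.
function lampFor(status: LineStatus): LampPattern {
  return LAMP_FOR_STATUS[status];
}
```

A windowed UI could use the same table to decide how to decorate a call's tab or title bar.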

But the analogy goes only so far. On a computer, activity can continue in a window even when it is not the active one. For example, the user may be playing a YouTube video in an inactive window. We should also note that a 1A2 Key System phone indicates only whether the local user has placed the call on hold; it does not know whether the far-end user has placed the call on hold or is retrieving it, which is the use case Carl wants to explore.

There is one other fundamental difference between the 1A2 Key System and the environment in which a WebRTC app will find itself. The phone can safely decide that when a call appearance becomes active, the call that was previously active must be placed on hold. But that may not be appropriate for WebRTC. For example, the user may want to continue the call while viewing and interacting with the contents of another window. Or the user may have multiple WebRTC sessions going at the same time in an attempt to emulate a bridged call. So the only safe approach is to let the user explicitly select whether a video call must be placed on hold or not.
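
To make the contrast with the key phone concrete, here is a small sketch in which focus and hold are tracked separately. `CallSession` and `SessionBoard` are hypothetical names invented for this example and are not EnThinnai's actual code. The point is only that switching focus never changes the hold state.

```typescript
// Hypothetical session model for illustration only.
interface CallSession {
  id: string;
  onHold: boolean;
}

class SessionBoard {
  private sessions: CallSession[] = [];
  private focusedId: string | null = null;

  add(id: string): void {
    this.sessions.push({ id, onHold: false });
  }

  private get(id: string): CallSession {
    const match = this.sessions.filter((s) => s.id === id)[0];
    if (!match) throw new Error(`unknown session: ${id}`);
    return match;
  }

  // Bringing a session to the foreground changes focus only.
  // Unlike the key phone, it never puts another session on hold.
  focus(id: string): void {
    this.focusedId = this.get(id).id;
  }

  // Hold state changes only through this explicit user action.
  setHold(id: string, onHold: boolean): void {
    this.get(id).onHold = onHold;
  }

  focused(): string | null {
    return this.focusedId;
  }

  // Ids of sessions that are not on hold, in the order they were added.
  liveSessions(): string[] {
    return this.sessions.filter((s) => !s.onHold).map((s) => s.id);
  }
}
```

With two sessions added, calling `focus` on the second leaves both live, which is what a user emulating a bridged call would expect. Only `setHold` removes a session from the live list.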

If we dig a bit deeper, we can question the basic need to place a call on hold in the first place. In PSTN systems, a call must be placed on hold if the user wants to attend to another call, because the access line can carry only one call at a time. That constraint does not apply to WebRTC. The user can equivalently decide to turn off the camera or the display or both instead of placing the whole call on hold.
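
Turning off the camera or the display can be sketched with the `enabled` flag that browser `MediaStreamTrack` objects expose: a disabled video track yields black frames instead of live video. The `TrackLike` interface and the `setVideoEnabled` helper below are assumptions made for this example so that it runs outside a browser. This is one possible approach and not necessarily how EnThinnai does it.

```typescript
// Minimal structural type: the subset of MediaStreamTrack this sketch touches.
interface TrackLike {
  kind: string;     // "audio" or "video"
  enabled: boolean; // false means the track outputs silence or black frames
}

// Hypothetical helper: enable or disable every video track, leaving audio alone.
// Pass local tracks to turn the camera off, or remote tracks to blank the display.
function setVideoEnabled(tracks: TrackLike[], on: boolean): void {
  for (const track of tracks) {
    if (track.kind === "video") {
      track.enabled = on;
    }
  }
}
```

In a browser, the array could come from `getTracks()` on the local or remote `MediaStream`. Audio keeps flowing in both cases, so the conversation continues while the video is off.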

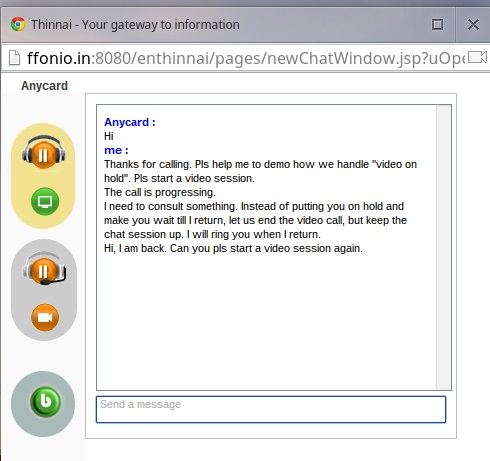

Recently, call centers have responded to callers’ frustration with excessive hold times by introducing a feature called “callbacks” or “virtual queuing”. A WebRTC app can offer a similar feature elegantly by being multi-modal: the text chat session periodically updates the caller on the queue status, and the same session serves as the link for delivering audio and video cues when an agent becomes available.
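
The multi-modal idea can be sketched as a function that turns queue events into chat text plus optional cues. The event shapes, the `Cues` fields, the message wording and the `cuesFor` name are all hypothetical. In a browser, the `pageVisible` argument could be derived from `document.visibilityState === "visible"`, which also covers the case of a user who has wandered off to another tab.

```typescript
// Hypothetical event and cue shapes for illustration only.
type QueueEvent =
  | { kind: "position"; position: number }
  | { kind: "agent-ready"; agentName: string };

interface Cues {
  chatText: string;    // always posted to the text chat
  playSound: boolean;  // audio cue
  flashTitle: boolean; // visual cue for a tab that is in the background
}

// Decide what the app should do for one queue event.
function cuesFor(event: QueueEvent, pageVisible: boolean): Cues {
  if (event.kind === "position") {
    // Routine status update: chat text only, no interruption.
    return {
      chatText: `You are number ${event.position} in the queue.`,
      playSound: false,
      flashTitle: false,
    };
  }
  // An agent is available: always play a sound, and add a visual cue
  // only if the user is looking at some other tab or window.
  return {
    chatText: `${event.agentName} is ready to take your call.`,
    playSound: true,
    flashTitle: !pageVisible,
  };
}
```

Keeping the decision in a pure function like this leaves the actual sound playback and title flashing to whatever UI layer the app already has.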

These thoughts are captured in the current user interface design used in EnThinnai:

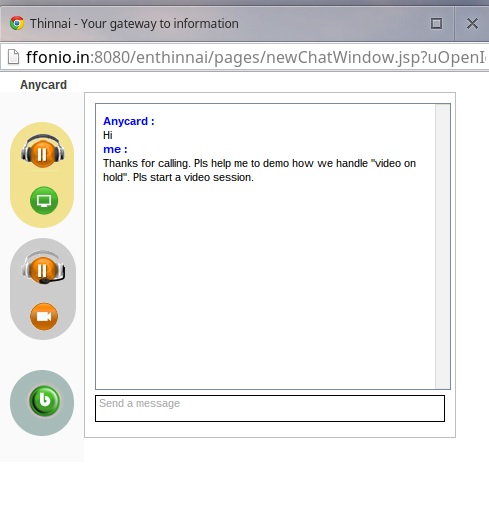

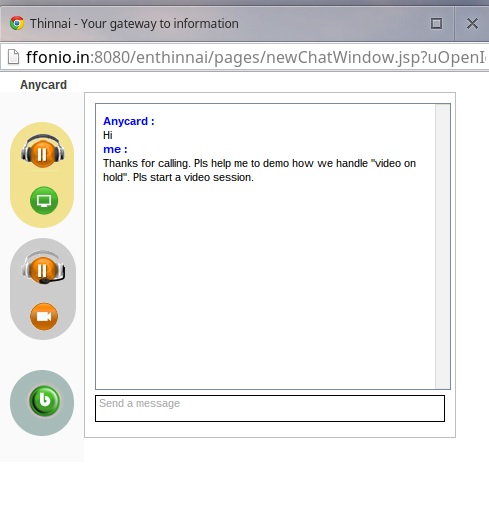

1. Screenshot of the chat window, just before start of a video call

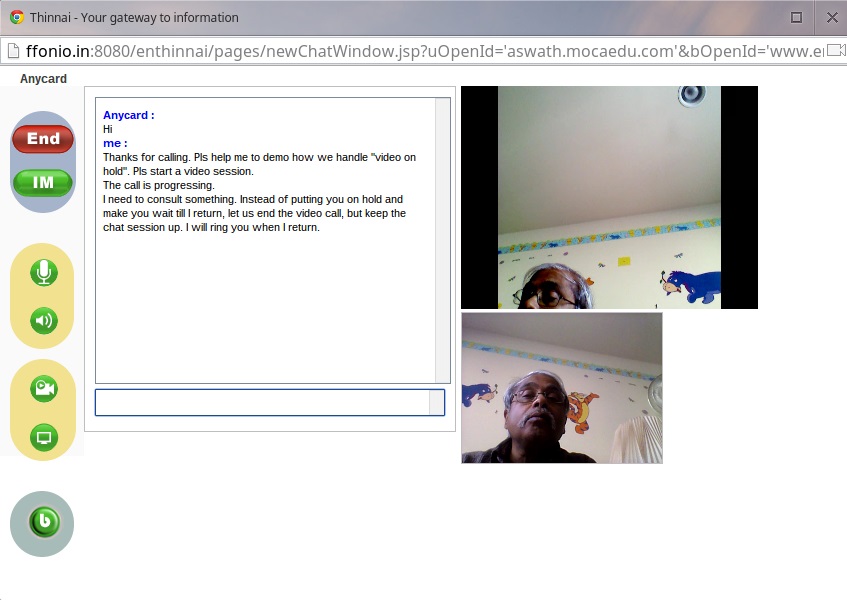

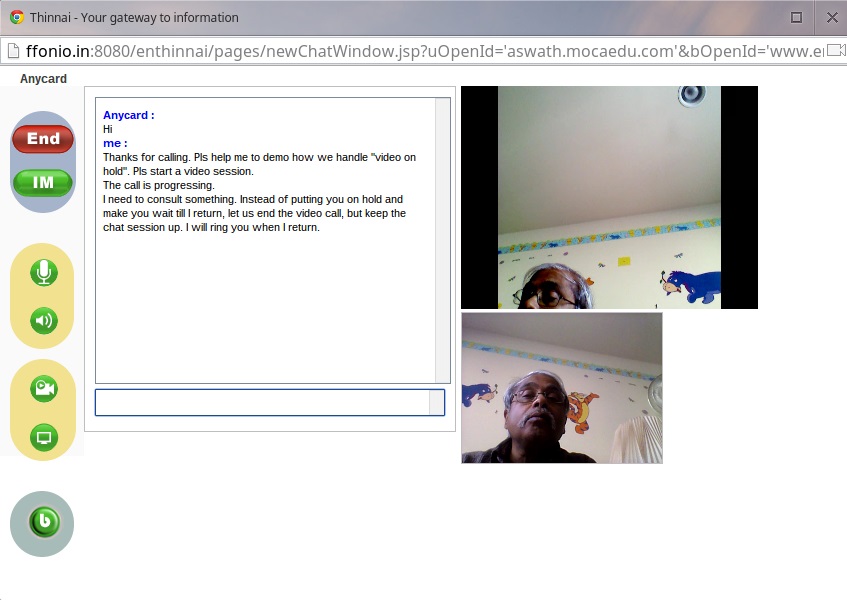

2. Screenshot during an active video call

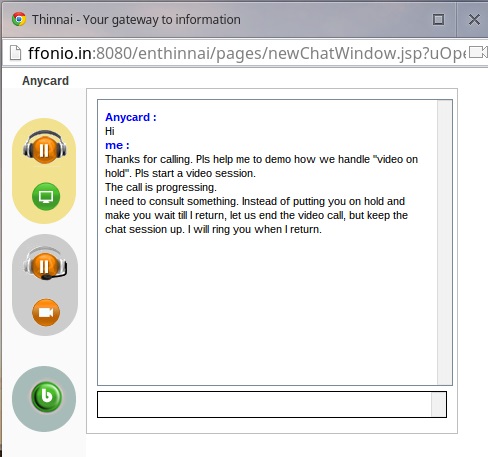

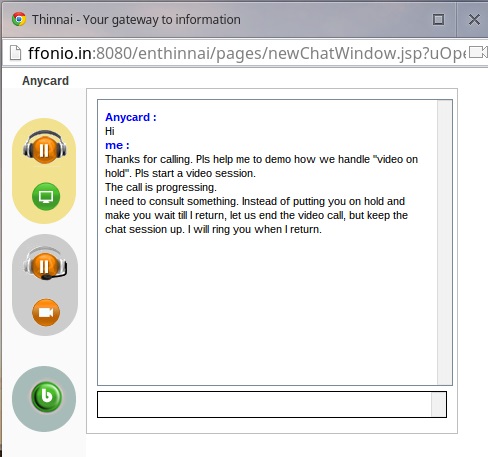

3. Screenshot of a “held” or “virtually queued” call

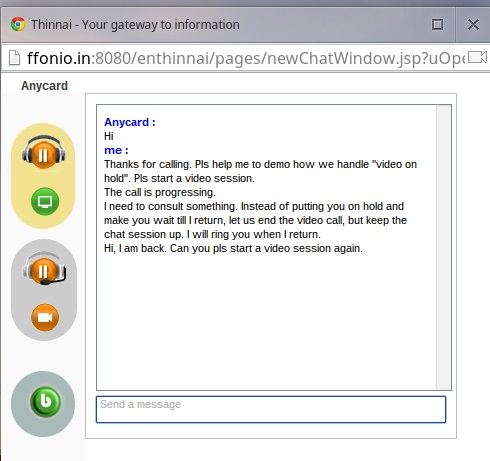

4. The far end sends a text message and triggers an audio alert by pressing the “b” button